Send Prompt

Send a text message (prompt) to ChatGPT.

Settings

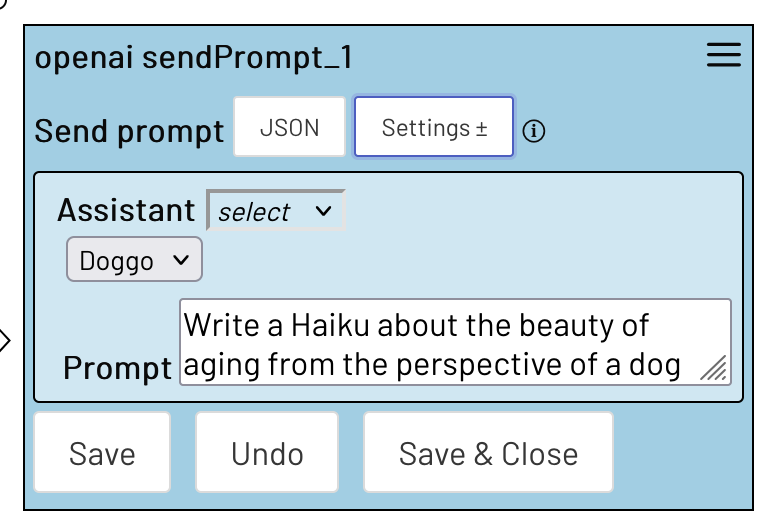

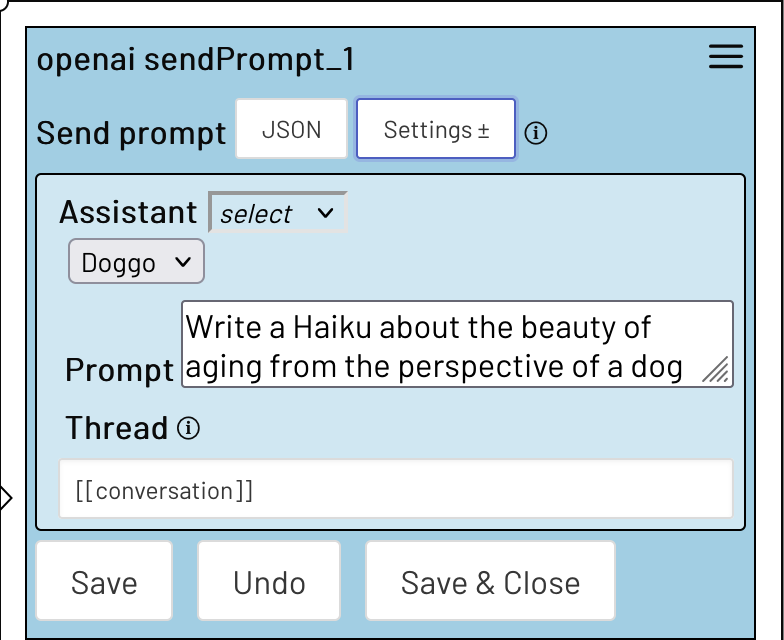

Assistant

The OpenAI assistant to which the message should be sent.

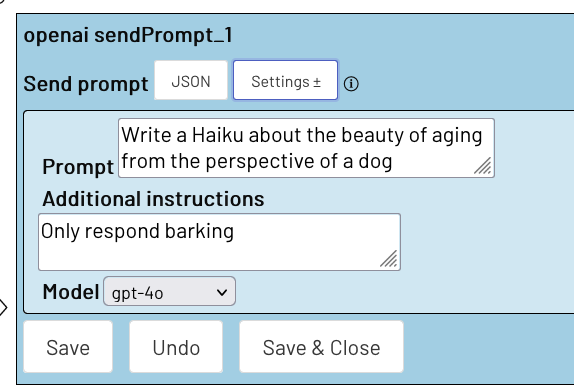

Prompt

The message to be sent.

Additional Instructions

Information about how the model should react.

OpenAI distinguishes between 'developer' (also known as 'system') messages and 'user' messages. In the plugin, no explicit distinction can be made between the roles. Instead, inputs under Prompt are always evaluated as user messages, while inputs under Additional Instructions are always evaluated as developer messages. For more information see: openAi/docs/roles
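The role mapping described above can be pictured with a minimal sketch. It is not the plugin's actual implementation: the helper name `build_messages` is hypothetical, and placing the developer message before the user message is an assumption made for illustration. Only the mapping itself (Prompt to `user`, Additional Instructions to `developer`) comes from the description above.

```python
def build_messages(prompt: str, additional_instructions: str = "") -> list[dict[str, str]]:
    """Hypothetical helper: tag the two action settings with their roles."""
    messages: list[dict[str, str]] = []
    if additional_instructions:
        # Additional Instructions are always treated as a developer message.
        messages.append({"role": "developer", "content": additional_instructions})
    # The Prompt is always treated as a user message.
    # Ordering (developer first) is an assumption, not confirmed by the plugin docs.
    messages.append({"role": "user", "content": prompt})
    return messages


if __name__ == "__main__":
    for message in build_messages("Summarize this ticket.", "Answer in one sentence."):
        print(message["role"], "->", message["content"])
```

If Additional Instructions is left empty, the sketch produces a single user message.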

Thread

Specify in which thread the message should be posted.

Only if you define an assistant and a thread can you really have conversations that build on each other. Otherwise, the model cannot remember any inputs/outputs.

Posting a message and receiving the reply can take a long time, depending on your internet speed. Please note that no parallel actions are possible in your session while this action is running.

As long as you are posting in a thread, it cannot be used from another session. It is your responsibility to prevent this, e.g. with the on Error action.

Model

Specify which model you want to work with. If you make a request without an assistant, you must specify a model. Otherwise, the setting is taken from the selected assistant.

More about this under: openAi/docs/models

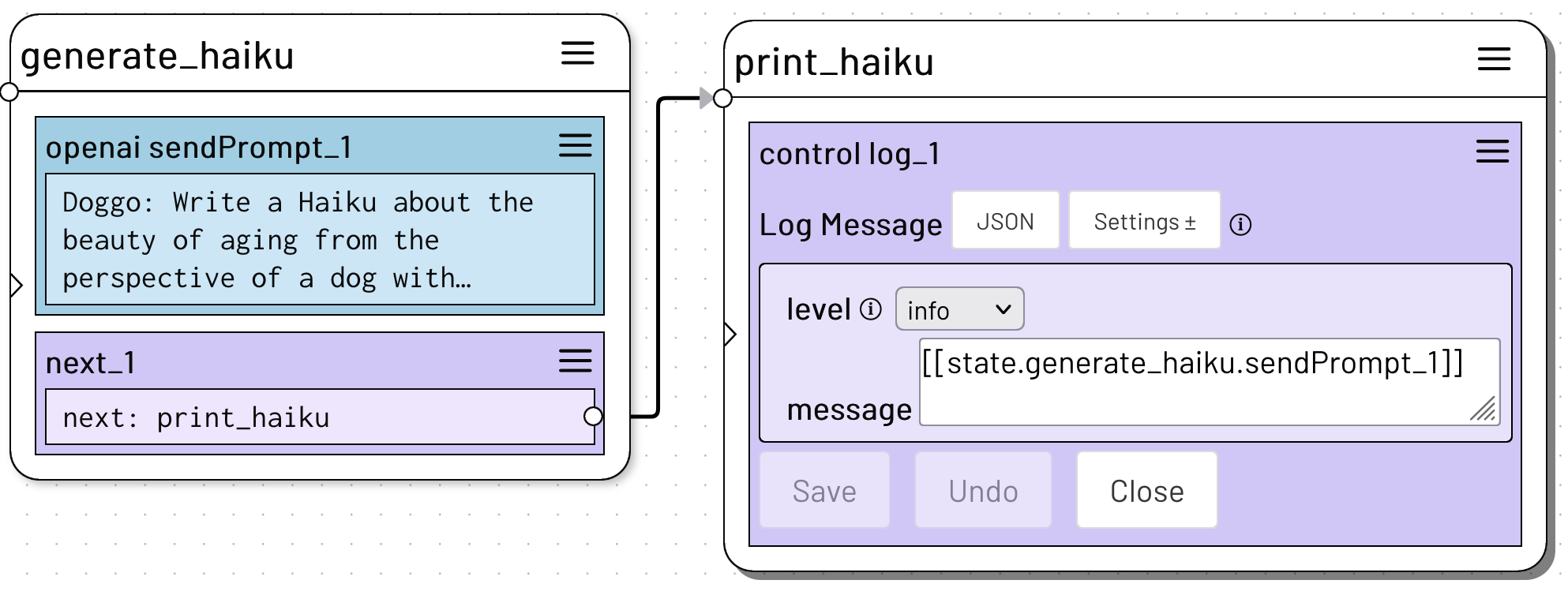

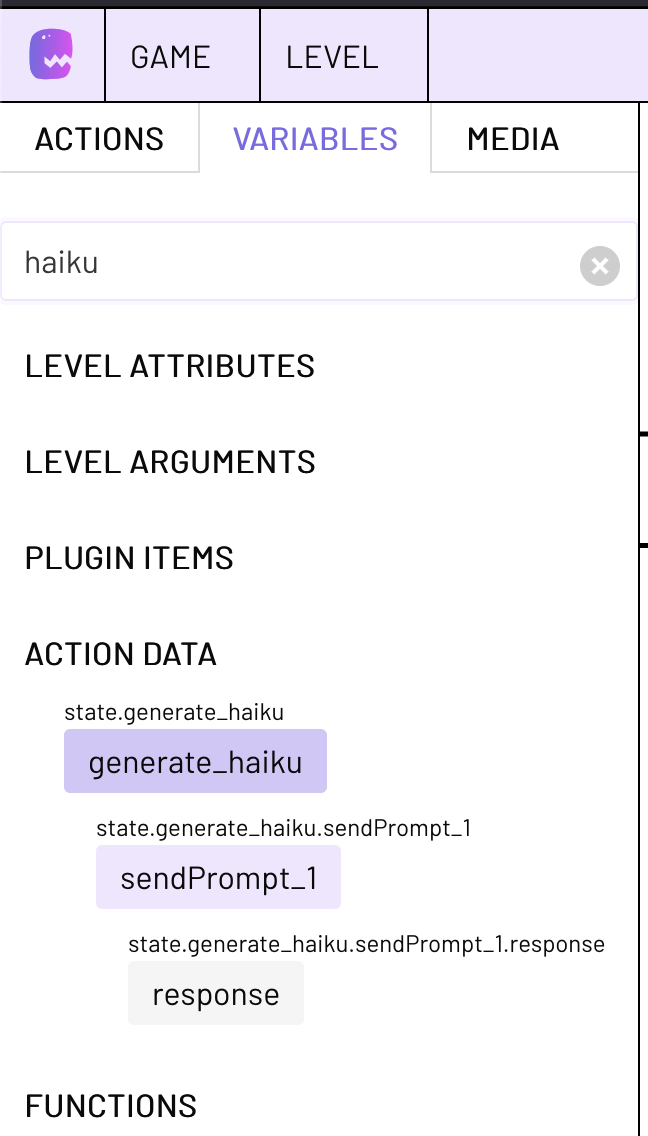

Variables

You can access the last received response to a prompt via the data variable of the Send Prompt action. For example, use

[[state.MyState.sendPrompt_1.response]]

to access the last received response of the top-level Send Prompt action in the state MyState.

response

The response to your request.

Examples

Depending on which combination of settings you select, the action behaves slightly differently.

The most direct variant is to define the prompt and the corresponding instructions directly in the action. In this variant, however, the model must also be selected explicitly.

In the next variant, an assistant has been selected, but no thread has been defined. Here too, the conversation is not saved, but the information about the assistant from its settings is attached to the request in the background so that the personality you have defined is retained. It is still possible to attach further instructions via the corresponding action setting.

A persistent conversation is only possible if an assistant and a valid thread have been specified.
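The three variants above can be summarized as a small decision sketch. The function name `describe_request` and the returned labels are hypothetical and serve only to illustrate the rules; treating a thread without an assistant like the direct variant is an assumption based on the statement that persistence needs both an assistant and a thread.

```python
from typing import Optional


def describe_request(
    assistant: Optional[str], thread: Optional[str], model: Optional[str]
) -> str:
    """Hypothetical summary of how the setting combinations behave."""
    if assistant and thread:
        # Assistant plus valid thread: the conversation builds on earlier messages.
        return "persistent"
    if assistant:
        # Assistant without thread: assistant settings are attached, nothing is saved.
        return "assistant-stateless"
    if not model:
        # Without an assistant, the model has to be selected explicitly.
        raise ValueError("A model must be specified when no assistant is selected.")
    # No assistant: prompt and instructions come directly from the action.
    # Assumption: a thread given without an assistant does not make this persistent.
    return "direct"


if __name__ == "__main__":
    print(describe_request(None, None, "some-model"))
    print(describe_request("my-assistant", None, None))
    print(describe_request("my-assistant", "my-thread", None))
```

In all three variants, further instructions can still be attached through the Additional Instructions setting.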

To call up the response to your request, look in the menu bar on the left under Variables and search for your state.

You can then process the response further.